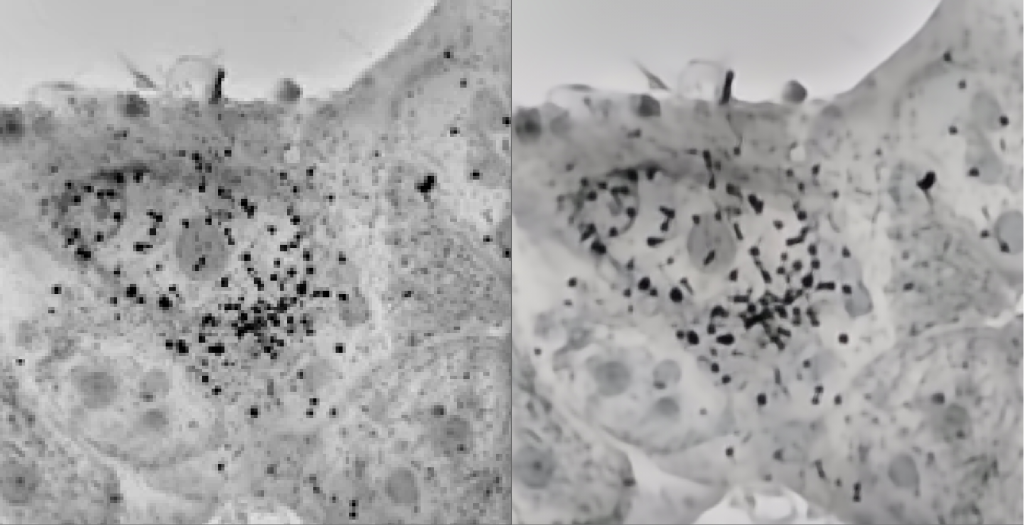

Transformative Artificial Intelligence Predictive Equations is dedicated to developing groundbreaking technology that revolutionizes data processing from visual to audio formats. This cutting-edge advancement represents a paradigm shift in the field of artificial intelligence, bridging the gap between the present and the future: tomorrow’s AI, today.

However, it is important to understand what transformative AI truly means. When researching this term online, one encounters numerous AI systems claiming to be transformative. Unfortunately, many of them merely use the term as a buzzword to highlight their impact on a particular industry. That impact may be profound in its own right, but this usage makes it challenging to identify AI models that genuinely employ transformative deep learning techniques.

To clarify the concept of transformative AI, it is essential to distinguish what it is not. Tasks like deblurring or super-resolution, which transformative AI can address, can also be accomplished using generative techniques. These generative techniques are similar, if not identical, to those found in existing AI software such as ChatGPT, ESRGAN, Midjourney, and others. It is therefore crucial to discern the characteristics of transformative AI and differentiate them from generative approaches. So in this setting, what is transformative AI, and what is generative AI?

Tasks:

Let us start with a task like those mentioned above: image super-resolution, or upscaling, where one takes an input image and increases its dimensions, with the AI technique focused on either reproducing or enhancing the image at the larger size.

Generative AI refers to techniques that aim to generate new data that resembles a specific distribution or training set. It involves modeling the underlying patterns and structures in the training data and using that model to generate new samples that have similar characteristics.
Generative AI can be used for tasks like image generation, text generation, or even music composition. In the context of upscaling, a generative method would analyze the surrounding pixel values and use them to create new pixel values that fit the desired upscaled resolution.

Transformative AI, on the other hand, focuses on transforming existing data or making changes to it while preserving the underlying distribution or characteristics of the original data. It aims to modify the input in a meaningful way without fundamentally altering its statistical properties. In the case of upscaling, a transformative approach would retain the existing pixel values and not introduce any new values.

The main difference between generative AI and transformative AI lies in their objectives. Generative AI aims to generate new data that resembles the training set or a specific distribution, while transformative AI focuses on making meaningful modifications to existing data without fundamentally changing its distribution or characteristics.

If we take another task, such as deblurring faces, a common problem is that generative approaches can produce visually sharp images but introduce unintended distortions or alter facial features. Often, the face in the output is not even the same face as in the input. Transformative AI methods, on the other hand, are more likely to retain the original appearance and preserve facial details during the deblurring process.

Why is generative AI better known than transformative AI?

Generative AI, especially deep learning models like generative adversarial networks (GANs) and variational autoencoders (VAEs), has gained significant attention in recent years due to its ability to generate new and creative outputs. As a result, there has been a greater emphasis on generative models in research, leading to more publications and discussions around them.
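
Returning briefly to the upscaling example: the difference between retaining existing pixel values and creating new ones can be sketched with two classical, non-learned resampling schemes. This is only an illustration under stated assumptions, not the method of any product named in this article. Nearest-neighbor repetition stands in for "no new values introduced", and bilinear interpolation stands in for "new values computed from surrounding pixels"; neither is a deep learning model, and the function names are hypothetical.

```python
def upscale_nearest(img, factor):
    """Upscale by repeating each pixel; every output value already exists in the input."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]  # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(factor))   # repeat each row vertically
    return out


def upscale_bilinear(img, factor):
    """Upscale by bilinear interpolation; outputs may be values absent from the input."""
    h, w = len(img), len(img[0])
    H, W = h * factor, w * factor
    out = []
    for i in range(H):
        # Map the output row back to a fractional input row.
        y = i * (h - 1) / (H - 1) if H > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for j in range(W):
            # Map the output column back to a fractional input column.
            x = j * (w - 1) / (W - 1) if W > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            # Blend the four surrounding input pixels.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def distinct_values(img):
    """Return the set of pixel values that occur in an image."""
    return {v for row in img for v in row}


# Hypothetical 2x2 grayscale image, upscaled to 4x4 both ways.
image = [[0, 100], [100, 200]]
nearest = upscale_nearest(image, 2)
bilinear = upscale_bilinear(image, 2)

# True when an upscaled image contains only values already present in the input.
nearest_reuses_input = distinct_values(nearest) <= distinct_values(image)
bilinear_reuses_input = distinct_values(bilinear) <= distinct_values(image)
print(nearest_reuses_input, bilinear_reuses_input)
```

In this toy case the nearest-neighbor output contains only the three original values, while the interpolated output contains in-between values that were never in the input. A learned generative upscaler goes further than interpolation, since its new values also depend on patterns in its training data, but the same check (do the output values come from the input?) captures the distinction the text draws.
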
Popular creative applications: Generative AI has gained popularity in areas such as image synthesis, text generation, and style transfer, which often produce visually striking or attention-grabbing results. These applications tend to attract more attention from the general public and media, leading to increased visibility.

Perception of transformative AI as “less exciting”: Transformative AI, by its nature, focuses on preserving and improving existing data or content. While it plays a crucial role in image enhancement tasks that can save companies millions, such as denoising and deblurring QA data, its outputs may not have the same novelty or eye-catching appeal as those of generative AI, which can create entirely new and imaginative content. This perception may contribute to transformative AI receiving less attention in popular discussions, even while it provides radically more useful applications for industrial, commercial, and real-world use cases.

While transformative AI may not always receive as much public attention, it remains a critical aspect of AI research and development. It is employed in fields like medical imaging, surveillance, and forensic analysis, which may not be as publicly visible but are still significant in their respective domains. Contrast this with generative AI, which has found applications in areas like creative design, entertainment, and advertising, where the ability to generate new and unique content is highly valued. Transformative AI applications contribute to improving existing data and enhancing various real-world tasks, even if they are not as widely recognized outside specific domains or academic circles.

Limitations of Generative Technology vs Transformative:

Concerning generative AI, the issue is not only that new details or pixels are generated at the macro level, but also that, because of the hallucinatory method, there is no true way to know how the AI reached those values in any specific instance.
(In that case, the problem is not just image manipulation that strays too far from ground truth to be practical, but also the irreproducibility of the methodology.)

Let us take two different potential applications, manufacturing and legal forensics.

In manufacturing, precision and accuracy are crucial. Generative AI’s ability to hallucinate or create new details and values introduces uncertainty into the process. When generating new data or values, there is a risk of introducing errors or inconsistencies that could impact the quality or functionality of manufactured products. The lack of control over the exact methodology used by the AI to reach those values makes it difficult to understand or reproduce the process, which further hampers the reliability and reproducibility needed in manufacturing settings.

In legal forensics, maintaining